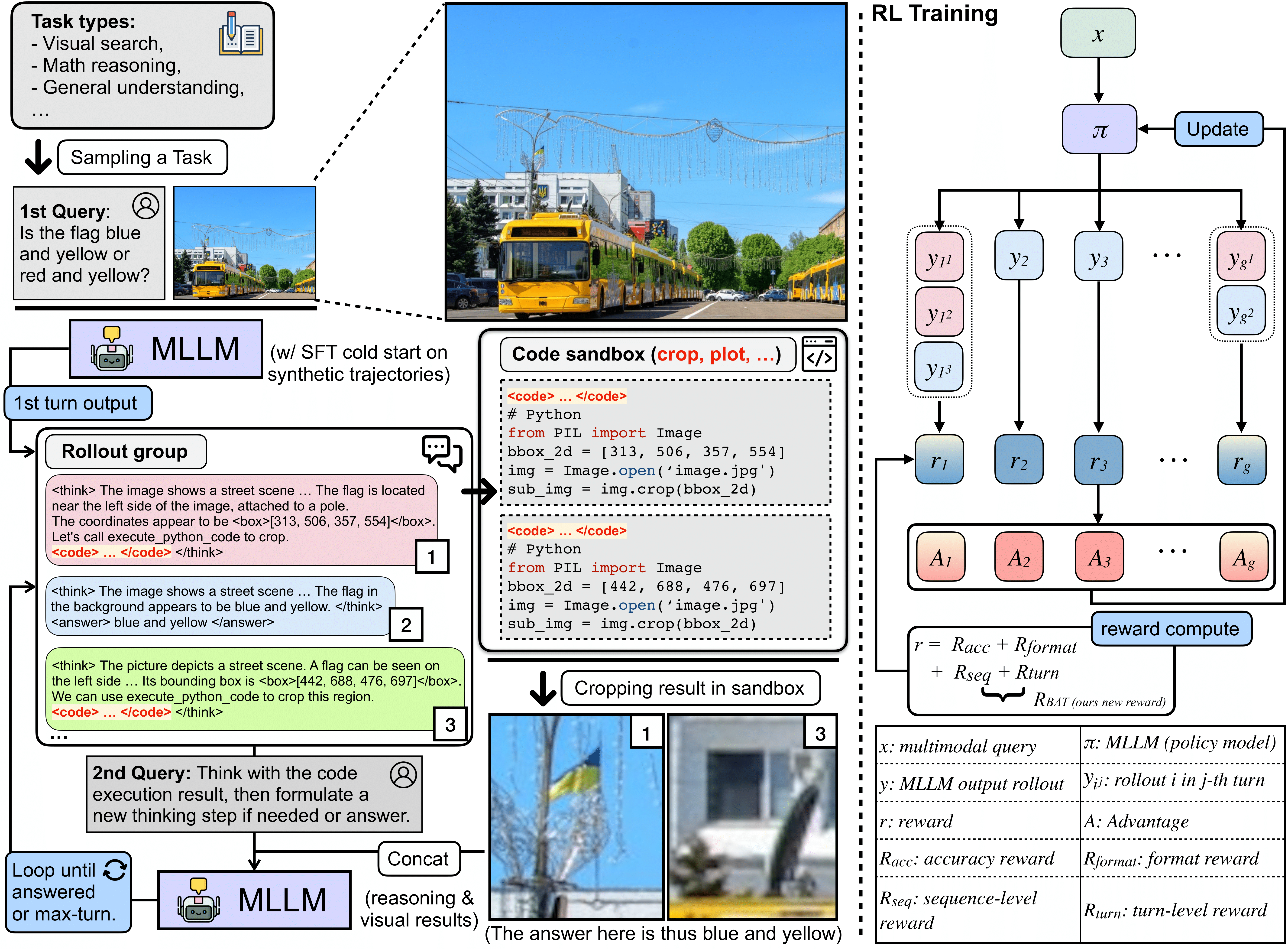

❐ Overview and Training Recipes

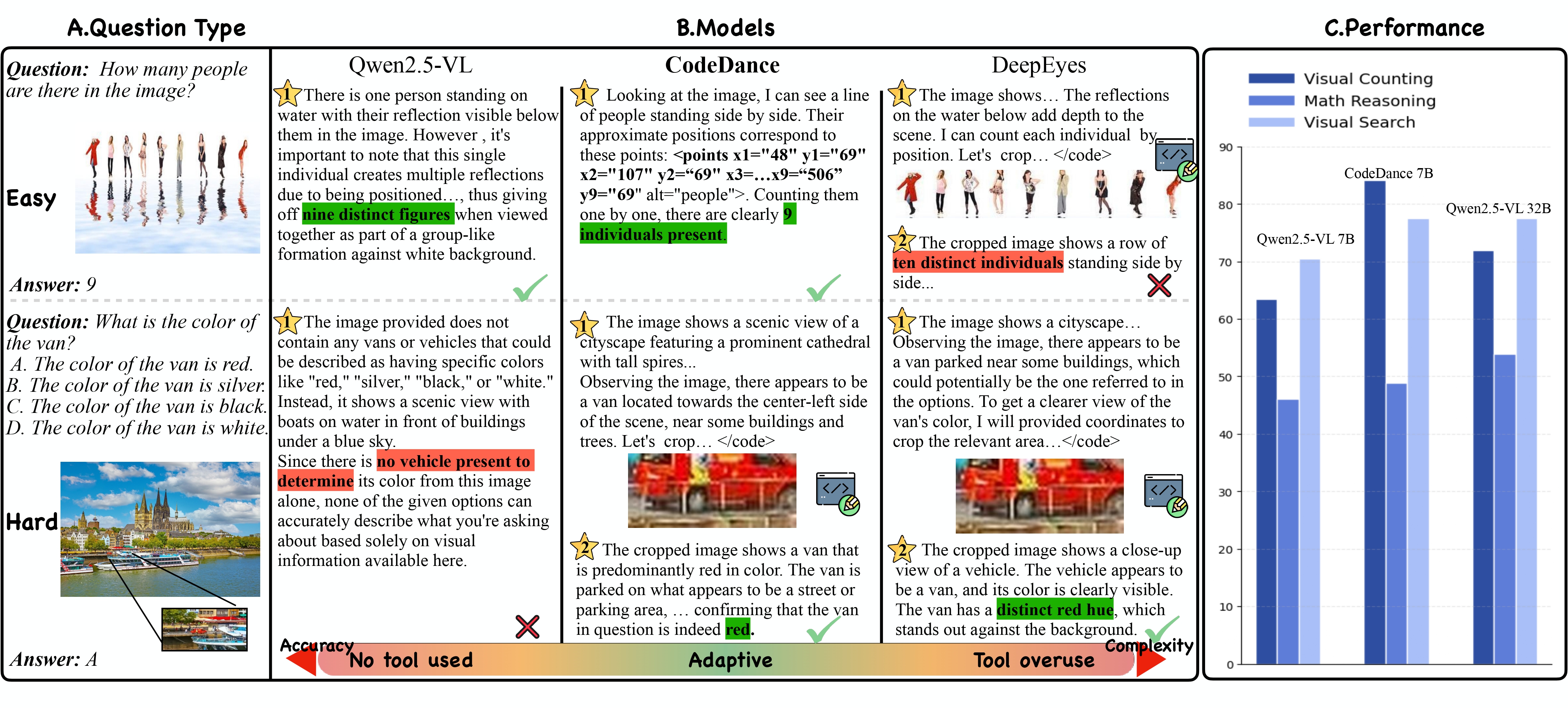

CodeDance is a dynamic tool-integrated MLLM that leverages executable code as a general solver for visual reasoning, enabling a transparent, self-checkable reasoning process.

Our training / inference pipeline consists of three stages:

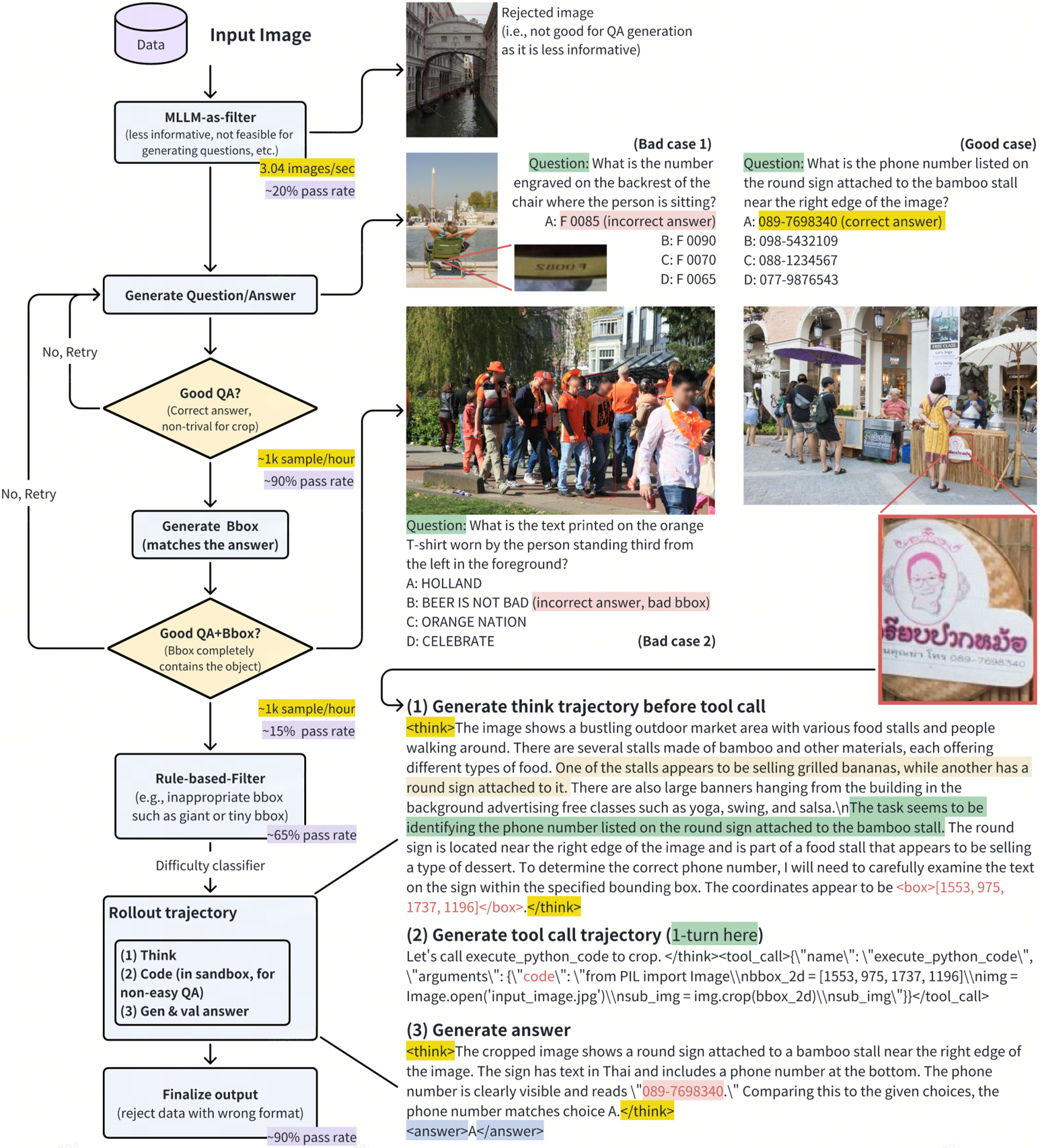

- Stage 1: Cold-start via Supervised Fine-tuning. We construct a 34k high-quality dataset of executable multi-turn trajectories to initialize the model before RL.

- Weak-to-strong filtering. We aggregate public datasets (e.g., SA1B, GEOqa_plus, MMK12) and apply automatic filtering: a weak model (Qwen2.5-VL-7B) prunes trivial cases, while a strong model stratifies the remaining samples into medium and hard difficulty levels.

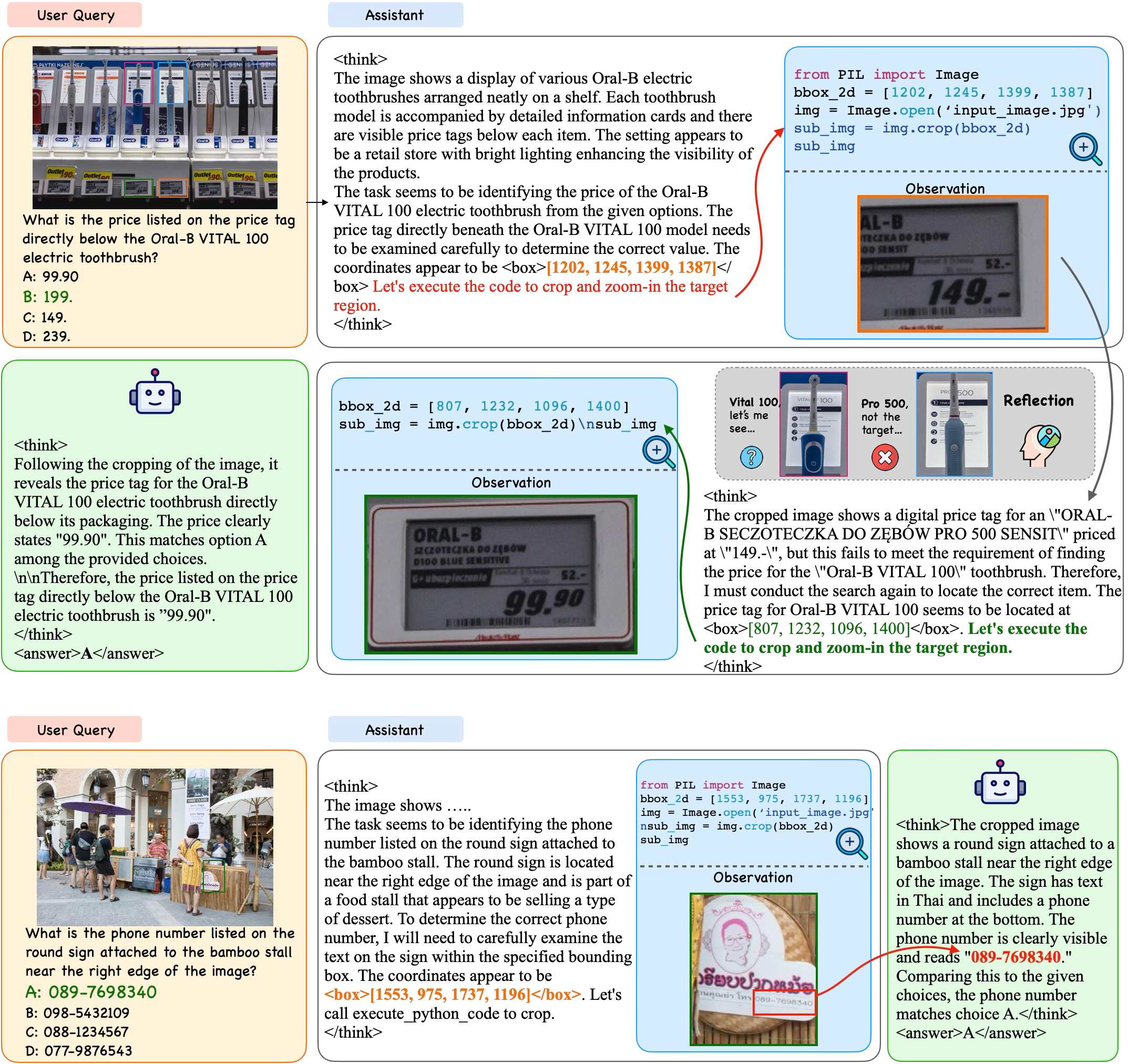

- Multi-turn atomic supervision. We decompose hard cases into verifiable executable trajectories across three atomic categories:

- Predefined visual operations. Annotated via predefined workflows to ensure reproducibility and scalability. Visual outputs (e.g., cropped views) serve as evidence for stepwise reasoning.

- Mathematical computation. Measurement, algebra, and aggregation are executed via Python/NumPy/SymPy with error filtering. A reasoning model first produces a chain-of-thought, which is segmented into a multi-step procedure; complex computational steps within the procedure are then translated into executable code.

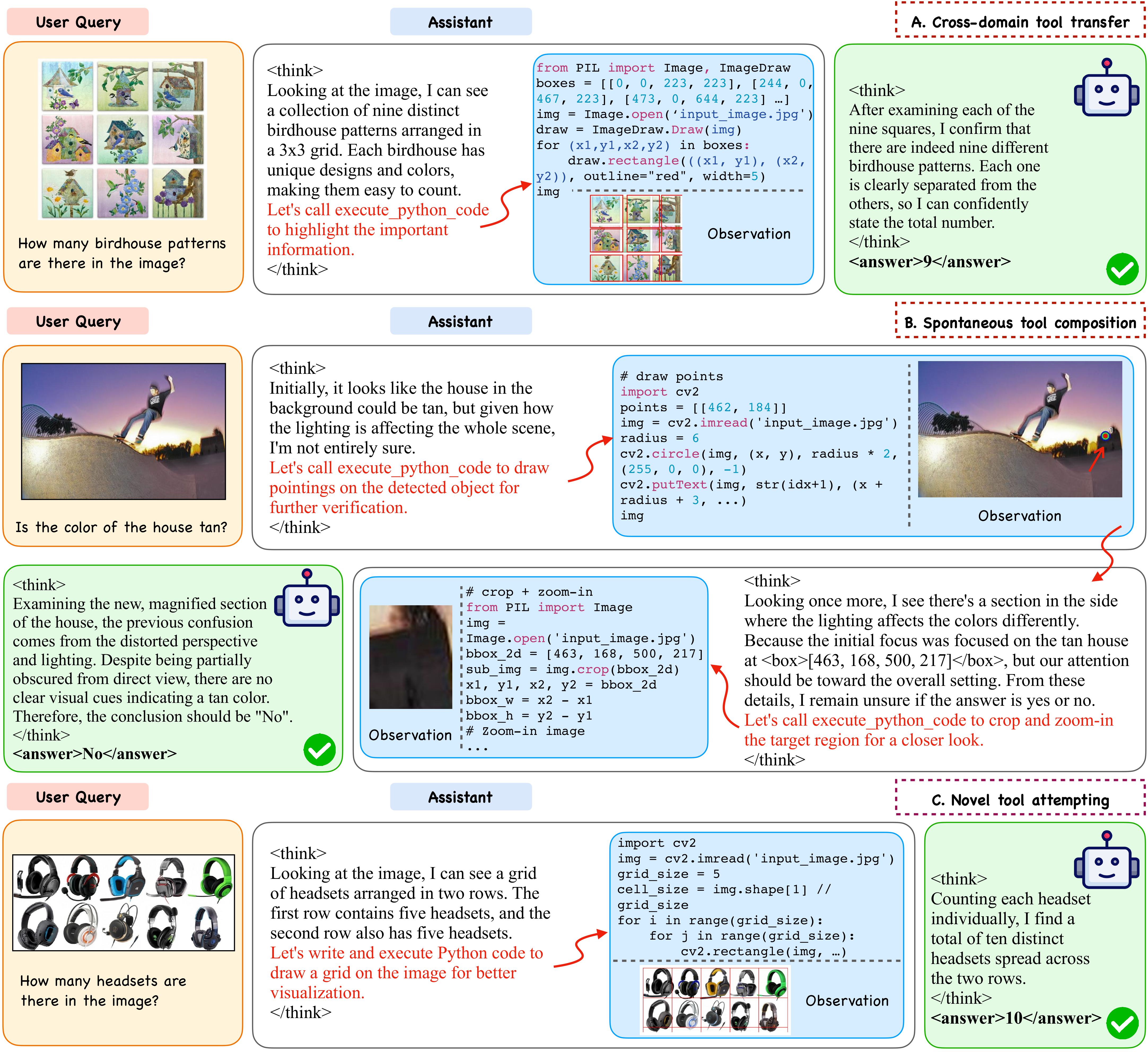

- Open-ended operations. The model can execute arbitrarily complex code that helps solve the task (e.g., drawing, annotation), enabling flexible and task-adaptive tool use.
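The weak-to-strong filtering step of Stage 1 can be sketched roughly as follows. This is a minimal illustration, not the actual pipeline: `weak_solves` and `strong_solves` are hypothetical callables standing in for model evaluation, and the rule "medium if the strong model solves it, hard otherwise" is an assumption, since the stratification criterion is not spelled out above.

```python
from typing import Callable, Dict, List, TypeVar

Sample = TypeVar("Sample")


def weak_to_strong_filter(
    samples: List[Sample],
    weak_solves: Callable[[Sample], bool],    # hypothetical: True if the weak model answers correctly
    strong_solves: Callable[[Sample], bool],  # hypothetical: True if the strong model answers correctly
) -> Dict[str, List[Sample]]:
    """Bucket samples into trivial / medium / hard.

    Assumption: a sample the weak model already solves is trivial (and would
    be pruned); among the rest, the strong model's success marks "medium"
    and its failure marks "hard".
    """
    buckets: Dict[str, List[Sample]] = {"trivial": [], "medium": [], "hard": []}
    for sample in samples:
        if weak_solves(sample):
            buckets["trivial"].append(sample)
        elif strong_solves(sample):
            buckets["medium"].append(sample)
        else:
            buckets["hard"].append(sample)
    return buckets


# Toy usage: integers stand in for samples, thresholds stand in for model skill.
toy = weak_to_strong_filter(
    list(range(8)),
    weak_solves=lambda s: s < 3,
    strong_solves=lambda s: s < 6,
)
```

Only the `medium` and `hard` buckets would be kept for trajectory construction; the `trivial` bucket is returned here purely for inspection.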

- Stage 2: Reinforcement Learning.

We optimize with a composite reward that integrates outcome and tool signals. Our two-level reward (Balanced Adaptive Tool-call), denoted as \( R_{\mathrm{BAT}} \), balances task difficulty with step-wise tool-call correctness:

\[ R_{\mathrm{BAT}} = R_{\mathrm{seq}} + R_{\mathrm{turn}}. \]

- Sequence-level. Difficulty-aware incentives adapt to group accuracy to discourage redundant calls on easy problems and promote exploration on hard ones. Formally:

\[ R_{\mathrm{seq}} = \Big(0.5 + 0.5 \cdot \mathbb{I}_{R_{\mathrm{acc}}(\tau) > 0}\Big) \cdot d \cdot \frac{N_{\mathrm{succ}}(\tau)}{N_{\mathrm{total}}(\tau)}, \]

where

\[ d = \sigma\!\big(\gamma (0.5 - \mu_{\mathrm{acc}})\big) - \delta, \quad \sigma(z) = \frac{1}{1 + e^{-z}}. \]

Here, \(N_{\mathrm{succ}}(\tau)\) and \(N_{\mathrm{total}}(\tau)\) denote successful and total tool calls in trajectory \(\tau\). The difficulty factor \(d\) shrinks when the group accuracy \(\mu_{\mathrm{acc}}\) is high (easy queries) and grows when \(\mu_{\mathrm{acc}}\) is low (hard queries), suppressing redundant tool invocations on easy cases while amplifying rewards for helpful tool use on challenging ones.
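As a rough numerical illustration of the sequence-level reward, the formula can be written directly in Python. The values passed for `gamma` and `delta` in the usage lines are placeholders chosen for the example, not the settings used in training, and returning `0.0` when a trajectory makes no tool calls is an assumption made to avoid division by zero.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def difficulty_factor(mu_acc: float, gamma: float, delta: float) -> float:
    """d = sigma(gamma * (0.5 - mu_acc)) - delta."""
    return sigmoid(gamma * (0.5 - mu_acc)) - delta


def sequence_reward(
    r_acc: float,    # accuracy reward of the trajectory
    n_succ: int,     # successful tool calls in the trajectory
    n_total: int,    # total tool calls in the trajectory
    mu_acc: float,   # mean accuracy of the rollout group
    gamma: float,
    delta: float,
) -> float:
    """R_seq = (0.5 + 0.5 * 1[R_acc > 0]) * d * N_succ / N_total."""
    if n_total == 0:
        # Assumption: no tool calls means no tool-call reward.
        return 0.0
    correctness = 0.5 + 0.5 * (1.0 if r_acc > 0 else 0.0)
    return correctness * difficulty_factor(mu_acc, gamma, delta) * n_succ / n_total


# Placeholder hyperparameters (illustrative only).
GAMMA, DELTA = 4.0, 0.0

d_easy = difficulty_factor(mu_acc=0.9, gamma=GAMMA, delta=DELTA)
d_hard = difficulty_factor(mu_acc=0.1, gamma=GAMMA, delta=DELTA)
r_correct = sequence_reward(1.0, n_succ=2, n_total=4, mu_acc=0.5, gamma=GAMMA, delta=DELTA)
r_wrong = sequence_reward(0.0, n_succ=2, n_total=4, mu_acc=0.5, gamma=GAMMA, delta=DELTA)
```

With these placeholder values, `d_easy` is smaller than `d_hard`, and a trajectory with a wrong final answer receives half the tool-call reward of an otherwise identical correct one.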

- Turn-level. Immediate penalties for failed executions are combined with a batch-normalized advantage to provide dense correction. For each turn \(m\), a failed code execution incurs an immediate penalty \(r_{\mathrm{turn}}^{m} = -0.5\) (otherwise \(0\)). To capture long-term effects, we define the accumulated discounted return and advantage as:

\[ G_{\mathrm{turn}}^{m} = r_{\mathrm{turn}}^{m} + \beta \cdot G_{\mathrm{turn}}^{m+1}, \qquad A_{\mathrm{turn}}^{m} = \frac{G_{\mathrm{turn}}^{m} - \mu_{\mathrm{batch}}}{\sigma_{\mathrm{batch}}}. \]

This turn-level signal assigns credit to correct intermediate tool executions, discouraging reward hacking where the final answer is correct but tool use is erroneous.
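The turn-level return and advantage can be sketched as a backward pass over each trajectory followed by a batch normalization. Several details here are assumptions for illustration: the return after the last turn is taken to be zero, the batch statistics are computed over all turns of all trajectories in the batch, the population standard deviation is used, and a small `eps` guards against division by zero.

```python
from statistics import mean, pstdev
from typing import List


def turn_returns(exec_ok: List[bool], beta: float, penalty: float = -0.5) -> List[float]:
    """G^m = r^m + beta * G^{m+1}, with r^m = penalty on failed execution, else 0.

    Assumption: the return after the final turn is 0.
    """
    returns = [0.0] * len(exec_ok)
    g_next = 0.0
    for m in reversed(range(len(exec_ok))):
        r_m = 0.0 if exec_ok[m] else penalty
        g_next = r_m + beta * g_next
        returns[m] = g_next
    return returns


def turn_advantages(batch_returns: List[List[float]], eps: float = 1e-8) -> List[List[float]]:
    """A^m = (G^m - mu_batch) / sigma_batch.

    Assumption: statistics are pooled over every turn in the batch.
    """
    flat = [g for traj in batch_returns for g in traj]
    mu = mean(flat)
    sigma = pstdev(flat)
    return [[(g - mu) / (sigma + eps) for g in traj] for traj in batch_returns]


# Toy batch: one trajectory with a failed second turn, one with no failures.
g_a = turn_returns([True, False, True], beta=0.5)
g_b = turn_returns([True, True], beta=0.5)
adv = turn_advantages([g_a, g_b])
```

In the toy batch, the failed turn and the turn preceding it both receive negative returns, so the penalty propagates backward through the discount factor `beta`.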

The final advantage combines sequence- and turn-level signals:

\[ A(\tau) = A_{\mathrm{seq}}\!\big(R_{\mathrm{acc}}, R_{\mathrm{format}}, R_{\mathrm{seq}}\big) + A_{\mathrm{turn}}\!\big(G_{\mathrm{turn}}\big). \]
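One possible reading of this combination is sketched below. The form of \(A_{\mathrm{seq}}\) is not specified above, so three assumptions are made purely for illustration: the sequence-level rewards are summed, the sum is normalized within the rollout group (a GRPO-style normalization, swapped in here as a stand-in), and the resulting scalar is broadcast to every turn before the turn-level advantage is added.

```python
from statistics import mean, pstdev
from typing import List


def group_normalize(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Assumed stand-in for A_seq: normalize total rewards within one rollout group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def combine_advantages(seq_adv: float, turn_adv: List[float]) -> List[float]:
    """Assumed broadcast: each turn gets the sequence-level advantage plus its own turn-level one."""
    return [seq_adv + a for a in turn_adv]


# Toy group of two trajectories; each total is an assumed sum R_acc + R_format + R_seq.
totals = [1.0, 3.0]
seq_advs = group_normalize(totals)
per_turn = combine_advantages(1.0, [0.0, -0.5])
```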


- Stage 3: Test-Time Extension and Scaling. Without task-specific fine-tuning, CodeDance exhibits emergent capabilities beyond supervised primitives: novel tool invocations, unseen tool compositions, and cross-task transfer, demonstrating strong generalization at inference. We additionally validate the potential for scaling after empirically observing the novel reasoning trajectories incentivized during RL.